FUTURE FLASH !

You know that feeling after Thanksgiving dinner when you have had all of your favorite foods at once and are so full that you think you will never eat again? Well, the past week has left my brain like that.

Within twelve days, I attended the SPSS Higher Education Road Show at UCLA, then the Innovations in Medical Education conference in Pasadena, then flew across the country to spend four stimulating days at the SAS Global Forum. The SPSS Higher Education Road Show was small but very interesting. There was an excellent presentation on teaching data mining to business students, and another on using SPSS to teach basic statistics with data that the students and their friends enter into SurveyMonkey. Plus, I had the chance to talk with colleagues in the field, which is always beneficial.

Oddly, while information on sasopedia/sascommunity, SAS Global Forum and SAS-L is all over the place, SPSS appears to want to keep its road show (excellent!), academic resources (just beginning, but good) and other web resources secret. After 15 minutes of searching, including on the SPSS web site, I couldn’t find any of them. Maybe next week I will do a blog on the secret SPSS sites and post some of the links that I have bookmarked on my computer back in California. (Yes, I know I should have bookmarked them on delicious, thank you for bringing it up – not!)

I am finally catching up with this blog, and I could spend the next three weeks writing every day on what I have learned, the fascinating people I have met or caught up with again, and my thoughts on emerging technology. Let’s start with common trends:

Data mining – this is one of the areas of greatest opportunity for both business intelligence and the growth of scientific knowledge. The data are out there and the tools are out there, whether it is Clementine from SPSS or SAS Enterprise Miner. We have the ability, far more than we are using at present, to determine who is likely to purchase information, who is likely to constitute a terrorist threat and which person is likely to engage in binge drinking. Why aren’t we using this information?

Lack of technically and scientifically competent people – (Hint: The answer is NOT to have more visas to hire foreign engineers and scientists.) At every conference I attended, there was a discussion of the need for more people knowledgeable about new technology, more people who understand the basic science and mathematics that underlie everything from diseases to parallel processing. Hiring staff from other countries doesn’t increase the number of available personnel; it just moves them around, sometimes switching the focus of a biology major from stemming the spread of tuberculosis in her home country of India to making the tastiest formula for Twinkies in the United States. There are a whole lot of reasons why that is not a good thing. Just one of them is that it does not address our national problem: we are not producing enough people in our own schools who are interested in and prepared to undertake basic and applied research on cancer, Alzheimer’s, the stock market, preventing alcohol abuse, identifying terrorist threats or why other people (but not me) lost 40% of their 401(k). On top of all of this is the scary notion of “statistics without math”. We have people who can get the software to work by following rules they have memorized but who don’t really know what they are doing. It’s sort of like having someone who cannot read road signs or maps drive across the country with a GPS. As long as the GPS works, there are no roads closed due to construction or bad weather, and the area they are going to is not so new that it doesn’t show in the GPS, they are fine. Once anything out of the routine happens, though, they’re screwed.

GUI is in, even if you don’t know what GUI is. GUI stands for graphical user interface, and it is what SAS Enterprise Guide has, for example, as do the SAS Forecasting Product and most of the newer offerings from both SAS and everyone else.

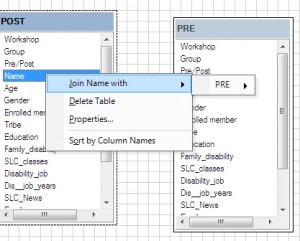

SAS WITH GUI MERGING PRE & POST DATA

For example, the image above shows two SAS datasets being combined using Enterprise Guide. This is actually how it is done, with the pointing and the clicking and the menus dropping down. Previously, this would have been done as follows:

SAS WITHOUT GUI MERGING PRE & POST DATA

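/* The libref has to match the "in." prefix used in the steps below */

libname in "c:\annmaria\data" ;

/* Both datasets must be sorted by the BY variable before a match-merge */

proc sort data = in.pre ;

by name ;

run ;

proc sort data = in.post ;

by name ;

run ;

/* Combine the pre and post records for each name into one dataset */

data in.alldata ;

merge in.pre in.post ;

by name ;

run ;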

While I found this a perfectly acceptable way of doing things, sad to say, the world is composed of people who are not me. (There will be a brief pause while I mourn this unfortunate state of affairs.) Now I may seem to be contradicting myself when I say that we need people who understand the underlying mathematics and logic of data analysis and then say that the move to GUI is a good thing. First of all, I didn’t say it was good; more that, like my children growing up, it is simply inevitable. Whether it is good or not is a moot point. Second, there is nothing keeping anyone from using Enterprise Guide or Forecast Server in extremely sophisticated ways. I don’t mean to imply that people need to be able to transpose covariance matrices in their heads or build a nuclear reactor out of wood to interpret an Analysis of Variance. I do think, however, that you should know that you cannot do an Analysis of Variance when all of your variables are categorical. (“Are you sure?” someone asked me recently, having already collected their dissertation data. Yes. I am sure. Very, very sure. If you laughed at this, seriously, you need to sell your slide rule and buy a life.)

The next two big things are text mining and social network analysis. If you combine textual analysis software with data mining and social network analysis and know what the hell you are doing (note all of my points above converging here), you could get some predictive power akin to science fiction. This was all brought together in a fantastic presentation co-authored by Howard Moskowitz, who is, among other things, the author of Selling Blue Elephants and fifteen other books and president of Moskowitz Jacobs.

Speaking of which, this illustrates yet another one of the amazingly cool things about conferences like these. After his presentation, I had a few questions about data modeling, and he was gracious enough to ask where I worked and where I attended graduate school. As people often do, he started naming people who had been his professors, well-known in the field, who were at USC or at UC and asking, “Well, have you heard of -”

After one or two whom I remembered and several who drew a blank, I finally gathered as much in the way of manners as I could muster (which, if you met me, you would know is not much) and said,

“Well, sir, the one I have really heard of is you!”

AnnMaria – I share your enthusiasm about data mining, and also agree with you that text mining and social network analysis are certainly two big important areas of the future. Here are two fun notes about these trends:

- Social Network Analysis: Wow, there is suddenly a LOT of interest in this in data mining circles. It’s not just the masses using Facebook and LinkedIn. I’ve been on the organizing committees of the last few KDD conferences. At KDD-2007 we had a few social network papers… but then at KDD-2008 we had a lot more, and the interest in them among the attendees was overwhelming. On the second day we had a panel on social network analysis, and we had to scramble to move it to a much bigger room. I’m glad that we didn’t have time to move in all the chairs, because even in the bigger room we had a crowd standing in the back and overflowing from the doors. There was incredible buzz in the room about what was becoming possible in social network analysis. Some panelists were proposing that most interesting human phenomena have a social component, and that other, simpler types of analysis could really be considered a restricted (reduced) form of social network analysis in which the network is reduced to one person acting in isolation.

- Text mining: The tools for this are really getting better and better, and computing power really helps too. I see this at both ends of the user spectrum: there are highly customizable tools like SPSS Clementine Text Mining, and there are tools that make it very simple for the user, like Oracle Data Mining with its very smart default values and processing power (it now lets you select a text field like “customer comment” and use it as an input variable in your predictive model). Both look like magic compared with what was available just a few years ago. I think we’re going to see great advances in text mining in the coming years. But as with AI, there’s also a danger that early enthusiasm can mask just how hard human text (and speech) really is. Truly extracting topics, sentiment and subtle meaning from text is very tough. Getting 60% of it right can have enormous business value, but getting the remaining 40% will take a long time and lots of effort.

Great blog – keep it going!

— Karl

Nothing profound to add at the moment. Loving your blog and will recommend it to all my PhUSE pals. http://www.phuse.eu

The GPS analogy was fantastic! I think it will encourage debate. There seem to be clusters of people around both extremes: (1) providing apps that allow data mining to non-statisticians is like giving a chainsaw to a child without instruction and protective clothing; (2) giving the apps is like removing cataracts from a blind person.

I think your GPS analogy could provide a more sensible debate.