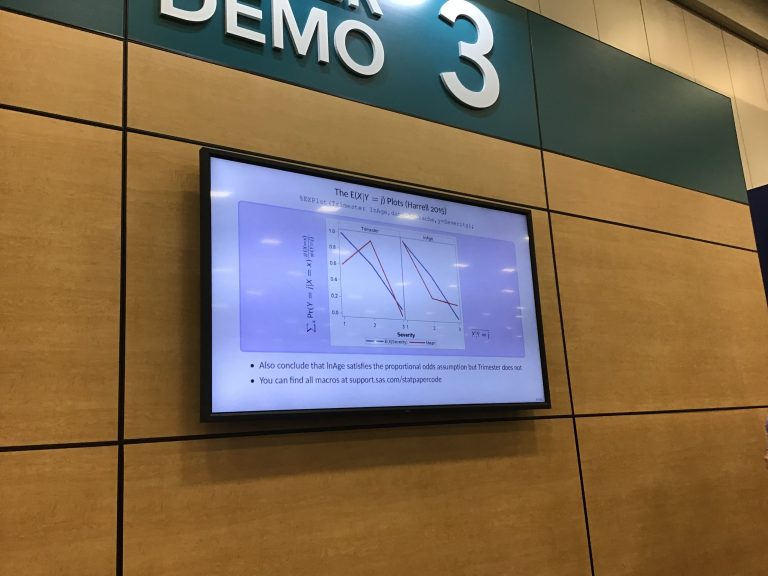

30 Things I Learned in 30 Years as a Statistical Consultant – Part 1 of lots

Never fear, I’m not going to post all 30 things in this one. This is a series. A LONG series. Get excited. I was invited to speak at SAS Global Forum next year, and after thinking about it for 14.2 seconds, it occurred to me that there are plenty of people at SAS and elsewhere…