Why won’t SAS see this character variable is equal?

When I selected observations where the character variable was equal to a certain value, SAS returned 0 observations – but I knew there should be a match!

A Twitter storm erupted recently in response to one person’s thread about how to find a 10x engineer. Since I started programming FORTRAN with punched cards back in 1974, was an industrial engineer in the 1980s and now run a software company, I’ve worked with a few people, rightly or wrongly considered to fall…

I had more tips on becoming a better programmer than the two I gave in the last post, but I had run out of margarita. Now, being replenished with tequila and fresh lime by The Invisible Developer, here are two more. He often tells me that I should refer to myself as a developer…

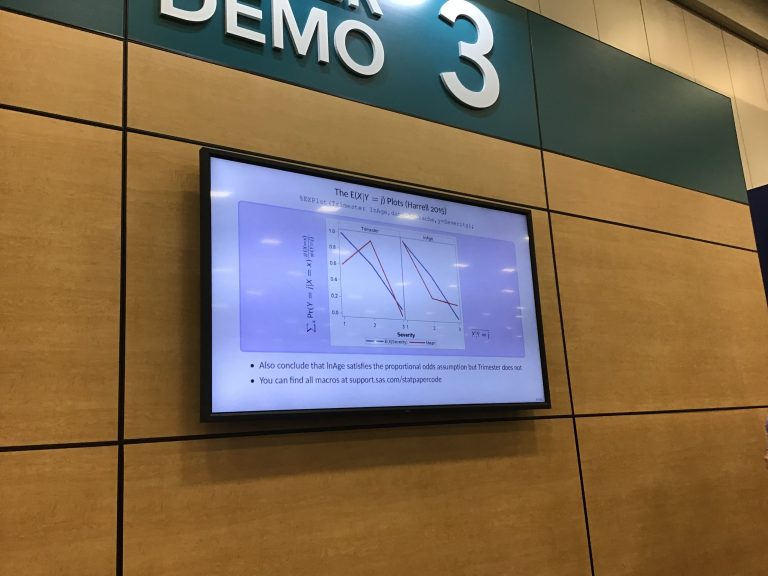

I did a random sample of presentations at SAS Global Forum today, if random is defined as of interest to me, which, let’s be honest, is pretty damn random most of the time. Tip #1: Stalk Interesting People. I don’t mean in a creepy showing-up-at-their-hotel-room way. If you see someone…

Since the last few posts detailed errors in repeated measures with PROC GLM, I thought I should acknowledge that people seem to struggle just as much with PROC MIXED. Forgetting that the data needs to be in multiple rows: this is one of the first points of confusion for students. When you do a PROC MIXED, you…

As I said in my last post, repeated measures ANOVA seems to be one of the procedures that confuses students the most. Let’s go through two ways to do an analysis correctly and the most common mistakes. Our first example has people given an exam three times: a pretest, a posttest and a follow-up…

When I teach students how to use SAS to do a repeated measures Analysis of Variance, it almost seems like those crazy foreign language majors I knew in college who were learning Portuguese and Italian at the same time. I teach how to do a repeated measures ANOVA using both PROC GLM and PROC MIXED….

Stop and read this. It may save you a whole lot of grief and panic. Maybe you’ve heard that a stolen iPhone is nothing more than a brick. Perhaps you feel as if your data is safe. You have a password and it’s not 123456. You have Find My iPhone. Allow me to burst your bubble…

I was going to write more about reading JSON data, but that will have to wait because I’m teaching a biostatistics class and I think this will be helpful to them. What’s a codebook? If you are using even a moderately complex data set, you will want a codebook. At a minimum, it will…

Sometimes data changes shape and type over time. In my case, we had a game that was given away free as a demo. We saved the player’s game state – that is, the number of points they had, objects they had earned in the game, etc. as a JSON object. Happy Day! The game became…